The Pathfinder Framework

1 NNF Quantum Computing Programme, Niels Bohr Institute, University of Copenhagen, Denmark

2 Quantum Foundry Copenhagen, Denmark

We present the framework and terminology adopted by the Novo Nordisk Foundation Quantum Computing Programme (NQCP) and the Quantum Foundry Copenhagen (QFC) to advance utility-scale quantum computing. The mission is motivated by the pursuit of solutions to otherwise intractable problems in materials and life sciences. The framework emphasizes interdisciplinary and cohesive teamwork under a common terminology, ensuring a mission- and data-driven focus while maintaining agility to accommodate disruptive innovations. Adopting the framework enables the prioritization of projects that most effectively accelerate progress in both quantum hardware and algorithms toward utility-scale fault-tolerant quantum computing (FTQC).

The pathfinder framework consists of three interconnected sub-frameworks:

- The mission ladder – A framework that connects high-level targets to lower-level performance metrics, providing a structured approach to guide and evaluate meaningful paths to utility-scale quantum computing.
- Mission-driven project management – An agile framework for coordinating mission-driven ‘inform-enable’ projects across multiple teams.
- Data-driven workflow framework – A framework for utilizing a unified database that supports informed decision-making in large and complex projects. The framework is designed to leverage supervised machine learning methods to accelerate the development process.

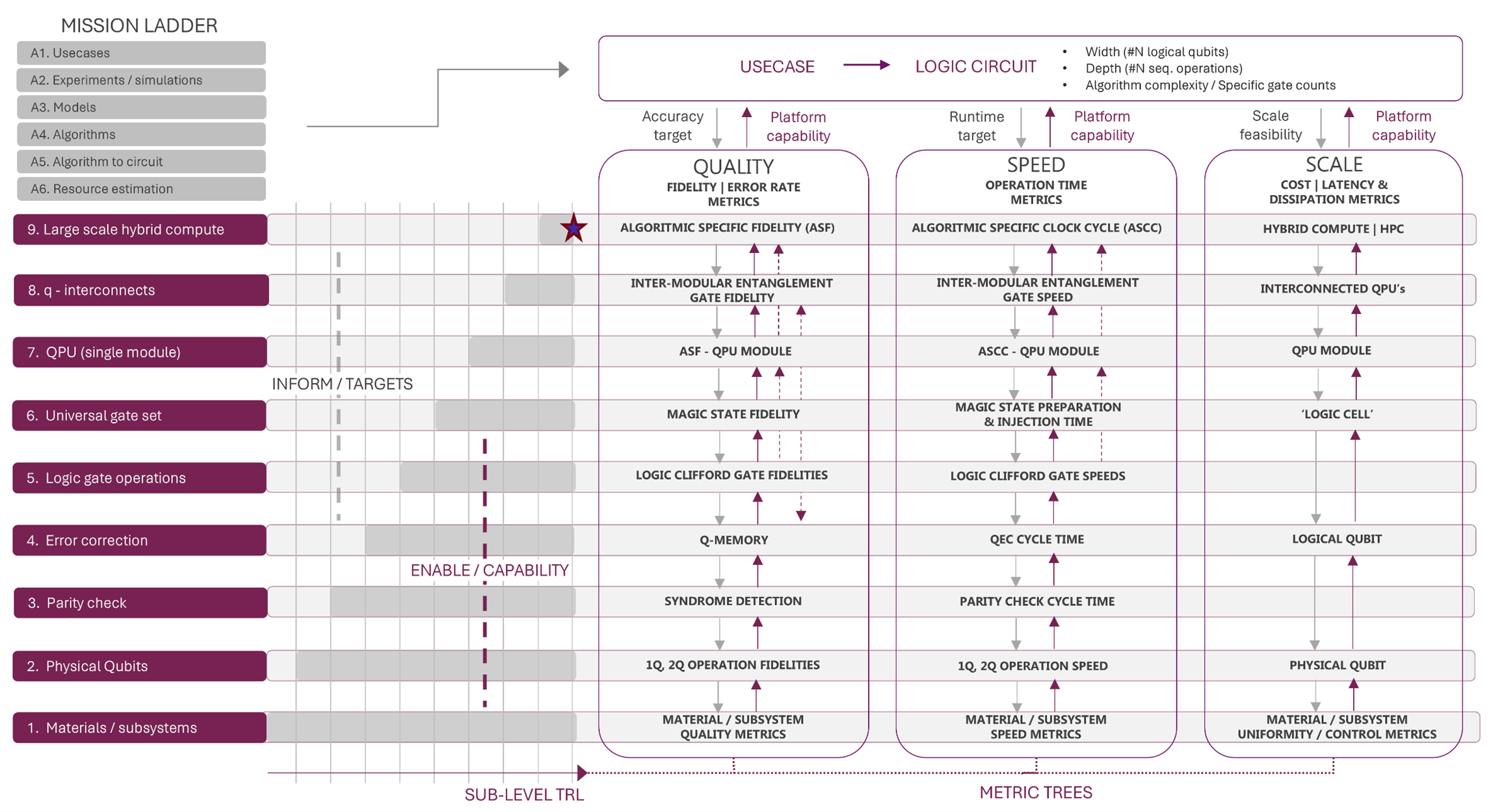

The mission ladder, see Figure 1, links the application & algorithms research (grey boxes) to hardware development (red boxes). The nine hardware technology readiness levels (TRLs) are designed to be broadly applicable to FTQC architectures, with lower levels forming the natural building blocks for higher ones. This framework supports “inform–enable” projects: theory and simulation efforts inform architectural FTQC hardware choices and define performance targets for quality, speed, and scalability, while engineering efforts enable progress through the realization of the required FTQC hardware.

Figure 1. The mission ladder connects the technology readiness levels needed for achieving utility-scale FTQC. The use-case algorithm and the resources for the corresponding logic circuit are estimated by the application & algorithms (A&A) team, which leads to performance targets for the hardware (HW) development. The quality, speed and scale pillars form the framework for ‘inform-enable’ projects via ‘metric trees’ that connect the critical metrics across the ladders.

Mission-driven project management. The core of the mission-driven project framework is to identify the most critical dependencies between higher and lower levels on the mission ladder. This enables mission-driven decision-making and prioritization of projects. The inter-layer dependencies start as hypotheses used to frame the project and are then uncovered by analyzing correlations between metrics of interest (MOIs), which are measures of performance on the different levels of the quality, speed or scale ladders. A ladder with interdependent MOIs is referred to as a metric tree (also called an MOI tree).

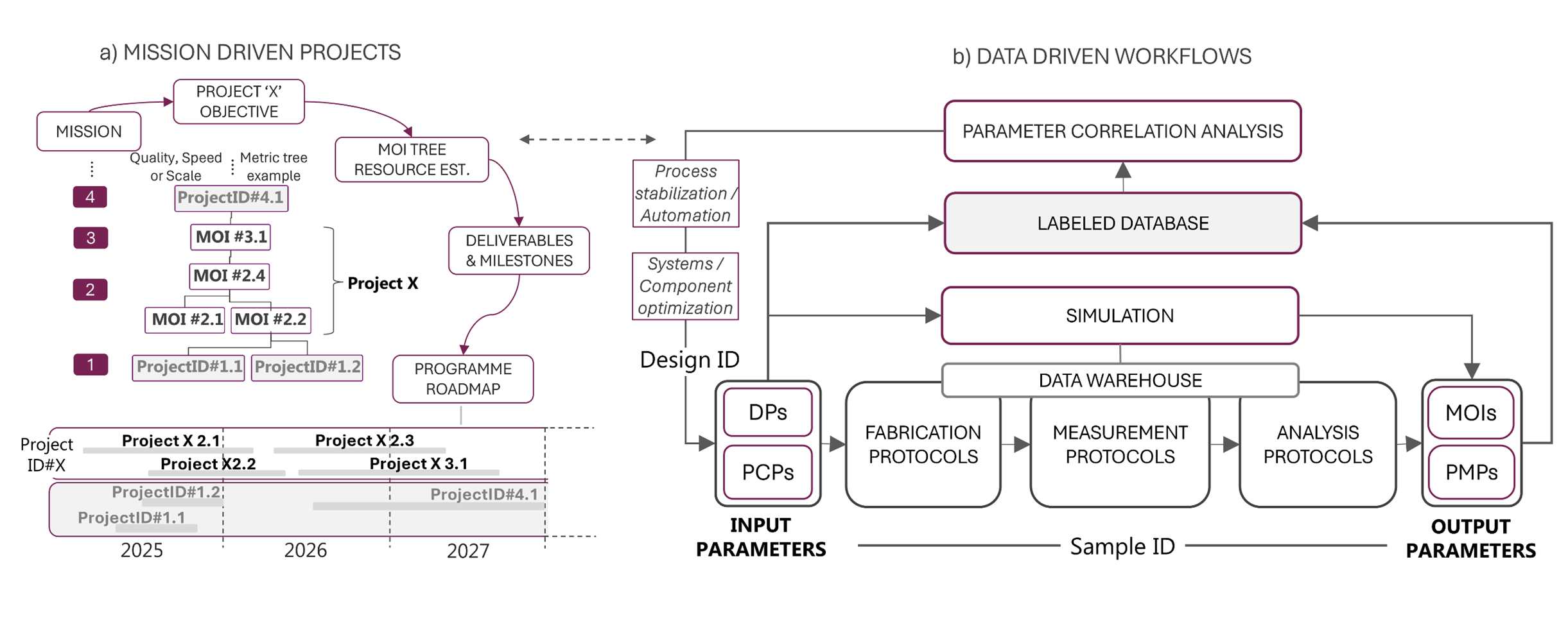

Mission-driven project management begins with teamwork to frame the project and its priority, followed by appointing a project manager and assigning individual contributors. For framing the project, as illustrated in Figure 2a, we recommend:

a) Define the project objective as a target for the project’s highest-level MOI.

b) Build a preliminary MOI tree that includes the levels relevant to the project, along with estimated targets for lower-level MOIs that are assumed to be critical for achieving the milestone.

c) Link the targets to deliverables on a shared platform roadmap.

We emphasize the importance of agile project management, with targets and deliverables updated as new data emerges, and projects discontinued when risk assessments indicate they are no longer viable.

Note: Sub-levels may exist within each of the main levels on the mission ladder. For example, achieving targets for qubit coherence times (MOI #2.1, 2.2) may enable achieving targets for gate fidelities (MOI #2.4) within level 2.
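The framing steps above can be sketched as a small data structure. This is a minimal illustration only, not the programme's tooling: the class `MOI`, the function `unmet_targets`, the "higher is better" convention, and all target values are assumptions introduced here. The MOI labels follow the example in the note (MOI #2.1 and #2.2 feeding MOI #2.4).

```python
from dataclasses import dataclass, field
from typing import Dict, Iterator, List, Optional


@dataclass
class MOI:
    """One metric of interest (MOI) in a metric tree (hypothetical schema)."""
    label: str                      # e.g. "2.4", following the MOI numbering in the text
    name: str
    target: Optional[float] = None  # estimated target; None while still undefined
    children: List["MOI"] = field(default_factory=list)  # lower-level MOIs assumed critical

    def add(self, child: "MOI") -> "MOI":
        """Attach a lower-level MOI that this MOI is hypothesized to depend on."""
        self.children.append(child)
        return child

    def walk(self) -> Iterator["MOI"]:
        """Yield this MOI, then every MOI below it, depth first."""
        yield self
        for child in self.children:
            yield from child.walk()


def unmet_targets(root: MOI, measured: Dict[str, float]) -> List[str]:
    """Labels of MOIs whose target is not yet reached.

    Assumes higher values are better; an MOI with a target but no
    measurement counts as unmet.
    """
    unmet = []
    for moi in root.walk():
        if moi.target is None:
            continue
        value = measured.get(moi.label)
        if value is None or value < moi.target:
            unmet.append(moi.label)
    return unmet


# Placeholder targets in arbitrary units, NOT values from the programme.
gate_fidelity = MOI("2.4", "gate fidelity", target=0.999)
gate_fidelity.add(MOI("2.1", "coherence time (MOI #2.1)", target=100.0))
gate_fidelity.add(MOI("2.2", "coherence time (MOI #2.2)", target=100.0))

print(unmet_targets(gate_fidelity, {"2.4": 0.9995, "2.1": 80.0}))  # ['2.1', '2.2']
```

Keeping targets on the tree nodes makes it straightforward to revise them as new data emerges, in line with the agile approach described above.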

Figure 2. a) Mission-driven projects begin with defining the project objective and setting targets for the MOIs within the MOI trees. These targets form the basis for milestones and deliverables, which are then consolidated into a unified roadmap to ensure program-wide visibility of dependencies. b) A well-defined project also requires a set of input parameters for hardware simulation, fabrication, and measurement protocols. All raw output data is stored in a centralized data warehouse, while all parameters are maintained in a labeled database to support mission- and data-driven R&D. A tight connection between mission-driven project management and data-driven workflows enables an agile R&D process that can continuously adjust deliverables as new insights emerge.

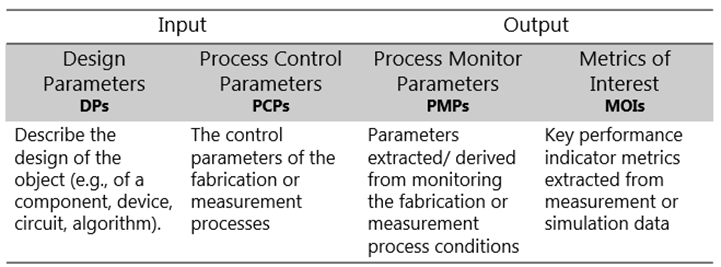

Data-driven workflows. A mission-driven project initiates a data-driven workflow, ideally starting from a parameterized design based on a set of design parameters (DPs). The other class of input parameters, process control parameters (PCPs), sets the control points on the experimental setups and thereby specifies the fabrication and measurement protocols. Measurements, whether acquired during fabrication monitoring, post-fabrication characterization, or quantum computing operations, generate raw output data, which are stored in the common data warehouse for easy visualization and analysis. From these data, process monitor parameters (PMPs) and metrics of interest (MOIs), which are discrete representative parameters of the measurement, are extracted using version-controlled analysis protocols and entered, alongside simulated MOIs, into the labeled database.
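As a rough sketch of how one entry in such a labeled database might be organized, the record below groups the four parameter classes and stores the analysis version next to each extracted value. The schema, the field names, the helper `log_moi`, and the trivial "mean of the trace" analysis are all assumptions made for illustration; the source does not specify a concrete format.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import Dict, List

ANALYSIS_VERSION = "0.1.0"  # hypothetical version tag of the analysis protocol


@dataclass
class LabeledRecord:
    """One labeled-database entry holding the four parameter classes."""
    sample_id: str
    design_parameters: Dict[str, float]            # DPs: inputs from the design
    process_control_parameters: Dict[str, float]   # PCPs: control points on the setups
    process_monitor_parameters: Dict[str, float] = field(default_factory=dict)  # PMPs
    metrics_of_interest: Dict[str, float] = field(default_factory=dict)         # MOIs
    analysis_versions: Dict[str, str] = field(default_factory=dict)
    simulated: bool = False  # True when the MOIs come from simulation


def log_moi(record: LabeledRecord, name: str, raw_trace: List[float]) -> LabeledRecord:
    """Reduce a raw trace to one representative number and store it as an MOI.

    The mean is a stand-in for a real analysis protocol.
    """
    record.metrics_of_interest[name] = mean(raw_trace)
    record.analysis_versions[name] = ANALYSIS_VERSION
    return record


# Illustrative names and values only.
record = LabeledRecord(
    sample_id="sample-001",
    design_parameters={"dp_width": 1.5},
    process_control_parameters={"pcp_temperature": 300.0},
)
log_moi(record, "moi_example", [1.0, 2.0, 3.0])
print(record.metrics_of_interest, record.analysis_versions)
```

Recording the analysis version with each value keeps extracted parameters traceable back to the protocol that produced them.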

The labeled database ensures transparency across workstreams and supports efficient system-level engineering, with the four parameter classes forming the basis for mission- and data-driven R&D. Examples include root cause analysis of process variability and performance optimization of MOIs through correlation/regression analysis and supervised machine learning.
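A correlation analysis of this kind can be sketched in a few lines. The example below ranks input parameters by the strength of their Pearson correlation with a chosen MOI. The flat row layout, the function names `pearson` and `rank_parameters`, and the data are assumptions for illustration; a real analysis would also need to consider sample size and confounding between parameters.

```python
from math import sqrt
from typing import Dict, List, Tuple


def pearson(xs: List[float], ys: List[float]) -> float:
    """Pearson correlation coefficient of two equally long samples."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two samples of equal length >= 2")
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant sample")
    return sxy / sqrt(sxx * syy)


def rank_parameters(rows: List[Dict[str, float]], moi: str) -> List[Tuple[str, float]]:
    """Rank parameters by |correlation| with the MOI, strongest first.

    Each row maps parameter and MOI names to values for one sample.
    Parameters that never vary are skipped.
    """
    ys = [row[moi] for row in rows]
    scores = {}
    for name in rows[0]:
        if name == moi:
            continue
        try:
            scores[name] = pearson([row[name] for row in rows], ys)
        except ValueError:
            continue
    return sorted(scores.items(), key=lambda item: abs(item[1]), reverse=True)


# Made-up rows: dp_a tracks the MOI, pcp_b was held constant.
rows = [
    {"dp_a": 1.0, "pcp_b": 5.0, "moi": 2.0},
    {"dp_a": 2.0, "pcp_b": 5.0, "moi": 4.0},
    {"dp_a": 3.0, "pcp_b": 5.0, "moi": 6.0},
]
ranking = rank_parameters(rows, "moi")
print(ranking)
```

A ranking like this only suggests candidate dependencies; it does not by itself establish a root cause.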

High-quality parameter generation is critical for efficient performance optimization and is ensured through a high level of process control. To evaluate process control, we examine MOI variability within and across experiments. MOI–PMP correlations may identify sources of variability, which can be mitigated through process automation, for example real-time, closed-loop adjustment of control points based on measurements of a process monitor. Under full automation, the process monitor itself becomes a control parameter and serves as the setpoint for a PCP. Performance optimization is carried out through design-of-experiments approaches, in which critical DPs and PCPs are systematically varied to refine MOI outcomes via DP/PCP–MOI correlations. Because high-level MOIs are typically more resource-intensive, with longer process turnaround times, a central focus is to establish MOI–MOI correlations with lower-level MOIs that enable faster optimization cycles.
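Two of the ideas above lend themselves to a short sketch: comparing MOI variability within and across experiments, and a single closed-loop correction of a control point toward a monitor setpoint. Both functions are illustrative assumptions, not the programme's method: `variability` uses population standard deviations as one simple choice of spread, and `closed_loop_step` is a plain proportional update that assumes the monitor reading rises with the control point.

```python
from statistics import mean, pstdev
from typing import Dict, List, Tuple


def variability(experiments: Dict[str, List[float]]) -> Tuple[float, float]:
    """Return (within, across) variability of one MOI.

    within: average spread of repeated measurements inside each experiment.
    across: spread of the per-experiment means.
    """
    within = mean([pstdev(values) for values in experiments.values()])
    across = pstdev([mean(values) for values in experiments.values()])
    return within, across


def closed_loop_step(control_point: float, monitor_value: float,
                     setpoint: float, gain: float = 0.5) -> float:
    """One proportional correction of a control point.

    Moves the control point by a fraction (gain) of the gap between the
    monitor setpoint and the latest monitor reading.
    """
    return control_point + gain * (setpoint - monitor_value)


# Made-up numbers for illustration.
within, across = variability({"run_a": [1.0, 3.0], "run_b": [5.0, 7.0]})
print(within, across)

new_control_point = closed_loop_step(control_point=10.0, monitor_value=4.0, setpoint=5.0)
print(new_control_point)
```

When the across-experiment spread dominates the within-experiment spread, run-to-run process drift is a more likely culprit than measurement noise, which is where correlations with process monitors become informative.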

Figure 3. The four main parameter classes, which are logged as discrete and representative parameters for pathfinding using the labeled database.

For more details on the programme, see NQCP – University of Copenhagen and Quantum Foundry Copenhagen.

Cite as: NQCP & QFC, The pathfinder framework (2025). URL: nqcp.ku.dk/pathfinder-framework, DOI: doi.org/10.5281/zenodo.17159625

* Corresponding author: Peter Krogstrup, krogstrup@nbi.dk